Deep Learning

Environment Setup

!pip install numpy scipy matplotlib ipython scikit-learn pandas pillow

Introduction to Artificial Neural Networks

Activation Function

Step Function

import numpy as np

import matplotlib.pyplot as plt

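def step_function(x):
    # Return 1 where x > 0 and 0 elsewhere.
    # np.int was removed in NumPy 1.24, so use the built-in int.
    return np.array(x > 0, dtype=int)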

x = np.arange(-5.0, 5.0, 0.1)

y = step_function(x)

plt.plot(x, y)

plt.ylim(-0.1, 1.1)

plt.show()

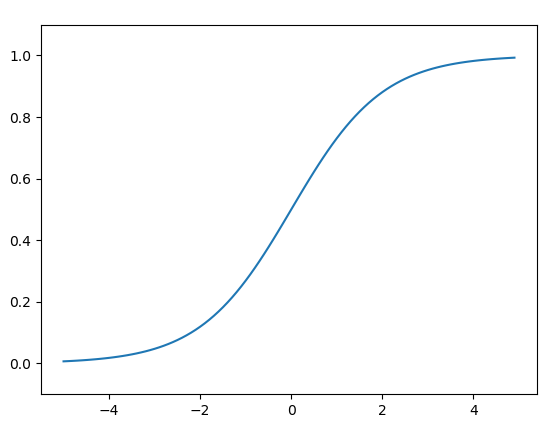
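Written as a formula, the step function plotted above outputs 1 for positive inputs and 0 otherwise, which is what the `x > 0` comparison in the code computes:

$$ f(x) = \begin{cases} 1 & (x > 0) \\ 0 & (x \le 0) \end{cases} $$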

Sigmoid Function

import numpy as np

import matplotlib.pyplot as plt

def sigmoid(x):
    # Logistic sigmoid, applied element-wise to a NumPy array
    return 1 / (1 + np.exp(-x))

# x = np.array([-1.0, 1.0, 2.0])

# print(y)

x = np.arange(-5.0, 5.0, 0.1)

y = sigmoid(x)

plt.plot(x, y)

plt.ylim(-0.1, 1.1)

plt.show()

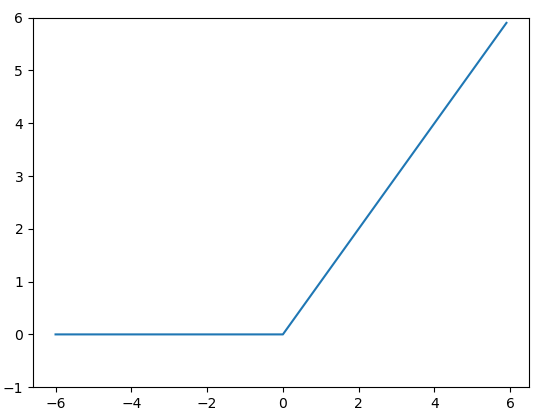
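For reference, the curve above is the standard logistic sigmoid, which the `sigmoid` function evaluates element-wise:

$$ f(x) = \frac{1}{1 + e^{-x}} $$

Unlike the step function, it changes smoothly and returns values strictly between 0 and 1.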

ReLU Function

$$ f(x) = \max(0, x) $$

import numpy as np

import matplotlib.pyplot as plt

def relu(x):
    # Element-wise max(0, x): negative inputs become 0, positive inputs pass through
    return np.maximum(0, x)

# x = np.array([-5.0, 5.0, 0.1])

# print(y)

x = np.arange(-6.0, 6.0, 0.1)

y = relu(x)

plt.plot(x, y)

plt.ylim(-1, 6)

plt.show()
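To see how the three activation functions differ numerically rather than only in the plots, the sketch below applies each of them to the same small array. The sample inputs are hypothetical values chosen for illustration, and the function definitions are repeated so the block runs on its own.

```python
import numpy as np

def step_function(x):
    # 1 where x > 0, else 0
    return np.array(x > 0, dtype=int)

def sigmoid(x):
    # Smooth squashing into the open interval (0, 1)
    return 1 / (1 + np.exp(-x))

def relu(x):
    # Negative inputs are clipped to 0
    return np.maximum(0, x)

# Hypothetical sample inputs: one negative, one zero, one positive
x = np.array([-1.0, 0.0, 2.0])

step_out = step_function(x)
sigmoid_out = sigmoid(x)
relu_out = relu(x)

print(step_out)     # [0 0 1]
print(sigmoid_out)  # roughly 0.269, 0.5, 0.881
print(relu_out)     # [0. 0. 2.]
```

The step function and ReLU agree that non-positive inputs map to 0, but ReLU keeps the magnitude of positive inputs, while the sigmoid compresses every input into the range between 0 and 1.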

Loss Functions

Sum of Squared Error

$$ E = \frac{1}{2} \sum_{k} (y_k - t_k)^2 $$

Here $y_k$ is the network's output for class $k$ and $t_k$ is the corresponding target label.
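A minimal NumPy sketch of the sum of squared error formula follows. The function name `sum_squared_error` and the sample arrays are assumptions made for illustration: `y` holds hypothetical network outputs and `t` a one-hot target whose correct class is index 2.

```python
import numpy as np

def sum_squared_error(y, t):
    # E = 1/2 * sum_k (y_k - t_k)^2
    return 0.5 * np.sum((y - t) ** 2)

# Hypothetical example: five classes, correct class at index 2
t = np.array([0, 0, 1, 0, 0])
y = np.array([0.1, 0.05, 0.6, 0.05, 0.2])

sse = sum_squared_error(y, t)
print(sse)  # approximately 0.1075
```

The error shrinks toward 0 as the output for the correct class approaches 1 and the other outputs approach 0.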

Cross-Entropy Error

$$ E = -\sum_k t_k \log y_k $$
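The cross-entropy formula can be sketched in NumPy as below, taking the logarithm as the natural log. The function name `cross_entropy_error`, the sample arrays, and the small constant `delta` are assumptions for illustration and are not part of the formula itself: `delta` is added only so that `np.log` never receives exactly 0.

```python
import numpy as np

def cross_entropy_error(y, t, delta=1e-7):
    # E = -sum_k t_k * log(y_k); delta (an assumption) guards against log(0)
    return -np.sum(t * np.log(y + delta))

# Hypothetical example: five classes, correct class at index 2
t = np.array([0, 0, 1, 0, 0])
y_good = np.array([0.1, 0.05, 0.6, 0.05, 0.2])  # 0.6 on the correct class
y_bad = np.array([0.6, 0.05, 0.1, 0.05, 0.2])   # only 0.1 on the correct class

cee_good = cross_entropy_error(y_good, t)
cee_bad = cross_entropy_error(y_bad, t)
print(cee_good)  # approximately 0.51
print(cee_bad)   # approximately 2.30
```

With a one-hot target, only the term for the correct class contributes, so the error is simply the negative log of the output assigned to that class: the lower that output, the larger the loss.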
